
13: Twin Interface Problems

\def \ititle {13: Twin Interface Problems}

\begin{center}

{\Large

\textbf{\ititle}

}

\iemail %

\end{center}

\section{An Interface Problem: Preferences}

‘we should search in vain among the literature for a consensus about the

psychological processes by which

primary motivational states,

such as hunger and thirst,

regulate simple goal-directed [i.e. instrumental] acts’

(Dickinson & Balleine, 1994 p. 1)

An interface problem ...


This is a very basic question.

Why do you go to the kitchen and press the lever to get some water

when you are thirsty?

An Interface Problem:

How are non-accidental matches possible?

Primary motivational states guide some actions.

Preferences guide some actions.

Pursuing a single goal can involve both kinds of state.

As in the case of lever pressing then magazine entry to get the sugar solution.

(Not demonstrated in this talk.)

Primary motivational states can differ from preferences.

Two motivational states match

in a particular context

just if, in that context,

the actions one would cause and the actions the other would cause

are not too different.

... an interface problem where there are two sets of preferences.

Here there’s conversation to sort them out

(although that doesn’t work so well)

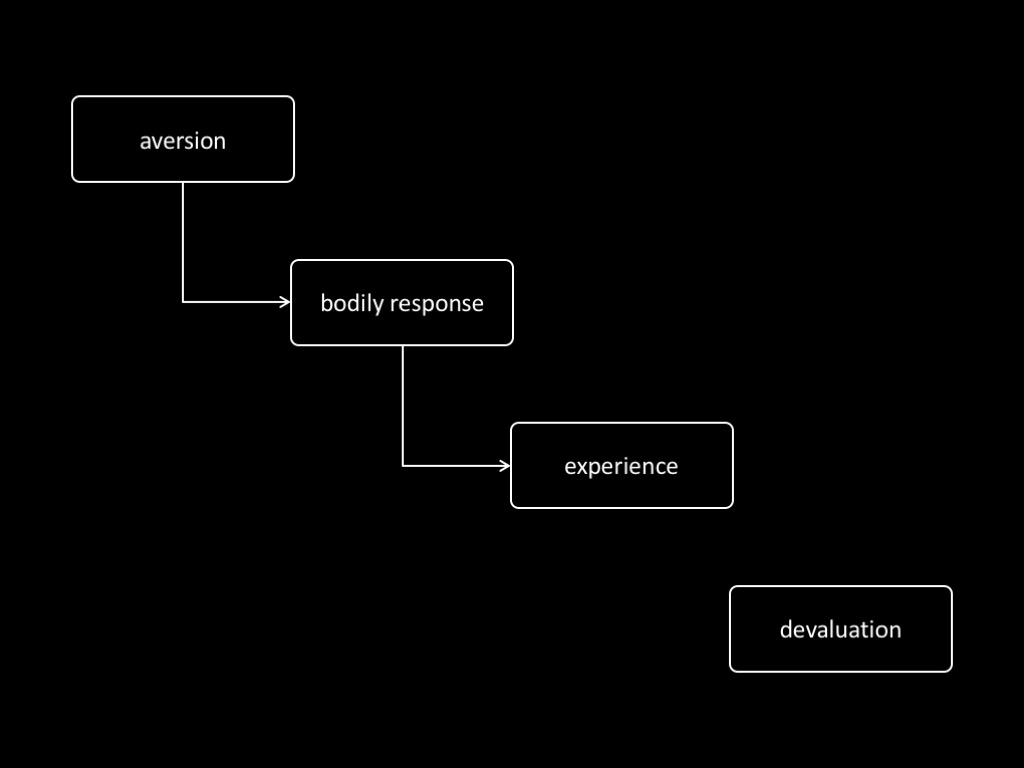

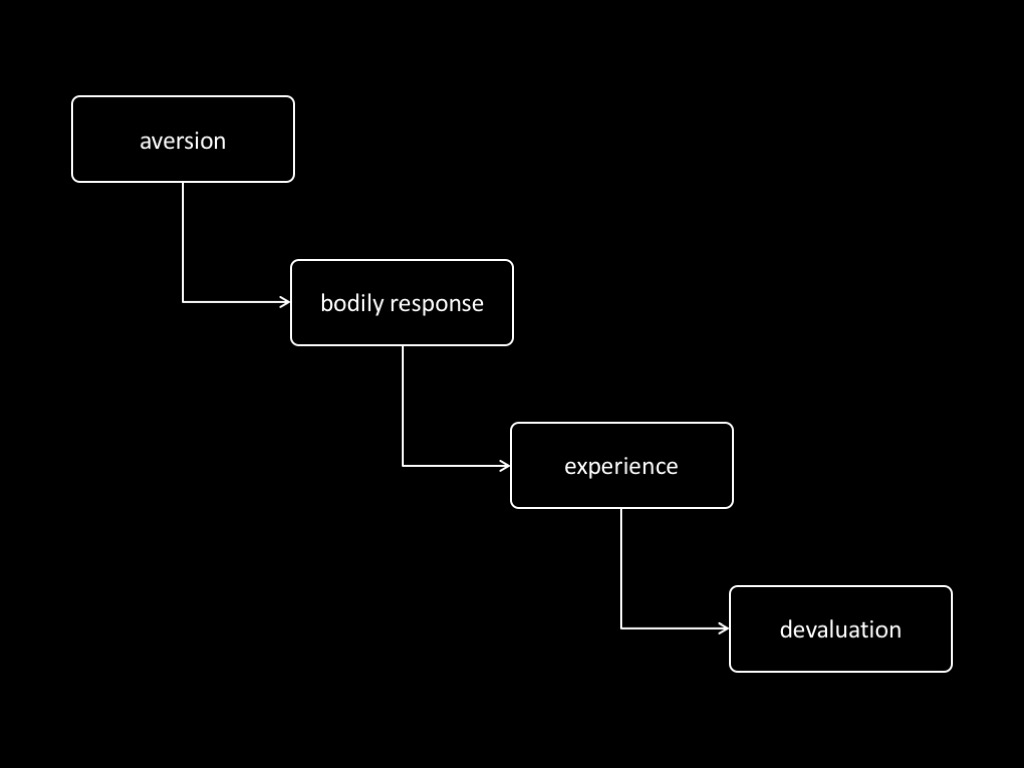

Experience is key ...

‘primary motivational states, such as hunger, do not determine the value of an instrumental goal directly;

rather, animals have to learn about the value of a commodity in a particular motivational state through direct experience with it in that state’

\citep[p.~7]{dickinson:1994_motivational}

‘primary motivational states have no direct impact on the current value that an

agent assigns to a past outcome of an instrumental action; rather, it appears

that agents have to learn about the value of an outcome through direct experience with

it, a process that we refer to as \emph{incentive learning}’

\citep[p.~8]{dickinson:1994_motivational}

Dickinson & Balleine, 1994 p. 7

A role for experience in solving the interface problem.

Why are rats (and you) aware of bodily states such as hunger and revulsion?

Because this awareness enables your preferences to be coupled,

but only loosely,

to your primary motivational states.

Isn’t it redundant to have dissociable kinds of motivational state?

‘the motivational control over goal-directed actions is, at least in part,

indirect and mediated by learning about one's own reactions to primary

incentives.

By this process [...], goal-directed actions are liberated from the tyranny of primary motivation’

\citep[p.~16]{dickinson:1994_motivational}

Dickinson & Balleine, 1994 p. 16

Another Interface Problem: Action

\section{Another Interface Problem: Action}

For a single action, which outcomes it is directed to may be multiply

determined by an intention and, seemingly independently, by a motor

representation. Unless such intentions and motor representations are to pull

an agent in incompatible directions, which would typically impair action

execution, there are requirements concerning how the outcomes they represent

must be related to each other. This is the interface problem: explain how any

such requirements could be non-accidentally met.

The interface problem: explain how intentions and motor representations, with their distinct

representational formats, are related in such a way that, in at least some cases, the outcomes they

specify non-accidentally match.

‘both mundane cases of action slips and pathological conditions, such as apraxia or anarchic hand

syndrome (AHS), illustrate the existence of an interface problem’

\citep[p.~7]{mylopoulos:2016_intentions}.

Two collections of outcomes, A and B, \emph{match} in a particular context just if, in that context,

either the occurrence of the A-outcomes would normally constitute or cause, at least partially, the

occurrence of the B-outcomes or vice versa. To illustrate, one way of matching is for the B-outcomes

to be the A-outcomes. Another way of matching is for the B-outcomes to stand to the A-outcomes as

elements of a more detailed plan stand to those of a less detailed one.

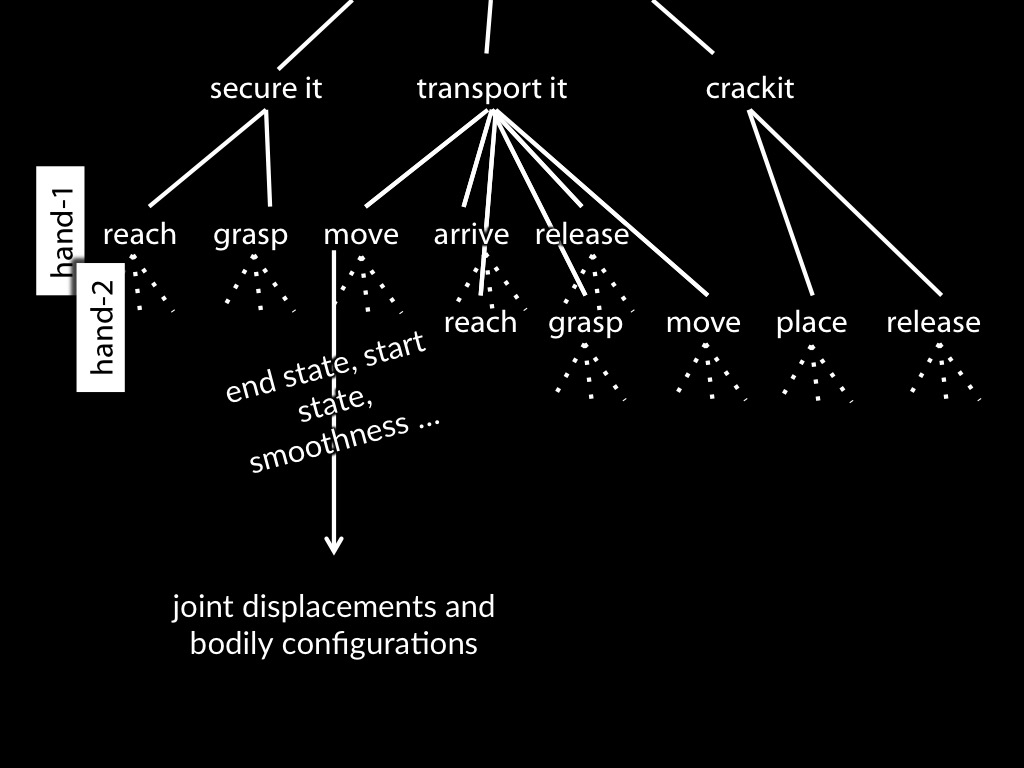

Imagine that you are strapped to a spinning wheel facing near certain death as it plunges you into

freezing water. To your right you can see a lever and to your left there is a button. In deciding

that pulling the lever offers you a better chance of survival than pushing the button, you form an

intention to pull the lever, hoping that this will stop the wheel. If things go well, and if

intentions are not mere epiphenomena, this intention will result in your reaching for, grasping and

pulling the lever. These actions---reaching, grasping and pulling---may be directed to specific

outcomes in virtue of motor representations which guide their execution. It shouldn't be an accident

that, in your situation, you both intend to pull a lever and you end up with motor representations

of reaching for, grasping and pulling that very lever, so that the outcomes specified by your

intention match those specified by motor representations. If this match between outcomes variously

specified by intentions and by motor representations is not to be accidental, what could explain it?

The Interface Problem:

How are non-accidental matches possible?

Motor representations specify goals.

As we have just seen, motor representations specify goals.

Intentions specify goals.

And of course, so do intentions.

Some actions involve both intention and motor representation.

Further, many actions involve both intention and motor representation.

When, for example, you form an intention to turn the lights out, the goal

of flipping the light switch may be represented motorically in you.

The nonaccidental success of our actions therefore depends on the outcomes

specified by our intentions and motor representations matching.

Intention and motor representation are not inferentially integrated (because representational format?).

Two outcomes, A and B, match in a particular context just if, in that context,

either the occurrence of A would normally constitute or cause, at least

partially, the occurrence of B or vice versa.

But how should they match?

I think they should match in this sense:

the occurrence of the outcome specified by the motor representation would

normally constitute or cause, at least

partially, the occurrence of the outcome specified by the intention.

Now we have to ask, How are nonaccidental matches possible?

If you asked a similar question about desire and intention, the answer would be

straightforward: desire and intention are integrated in practical reasoning, so it

is no surprise that what you intend sometimes nonaccidentally conforms to what you desire.

But we cannot give the same sort of answer in the case of motor representations and

intentions because ...

Intention and motor representation are not inferentially integrated.

Beliefs, desires and intentions are related to the premises and conclusions

in practical reasoning. Motor representations are not.

Similarly, intentions do not feature in motor processes.

Failure of inferential integration follows from the claim that they differ in

format and are not translated. But I suspect that more people will agree that there is a

lack of inferential integration than that they differ in representational format.

(must illustrate format with maps).

So this is the Interface Problem: how do the outcomes specified by intentions and

motor representations ever nonaccidentally match?

‘both mundane cases of action slips and pathological conditions, such as apraxia or anarchic hand syndrome (AHS), illustrate the existence of an interface problem.’

Mylopoulos and Pacherie (2016, p. 7)

Having an intention is neither necessary nor sufficient.

Jeannerod 2006, p. 12:

‘the term apraxia was coined by Liepmann to account for higher order motor

disorders observed in patients who, in spite of having no problem in executing

simple actions (e.g. grasping an object), fail in actions involving more

complex, and perhaps more conceptual, representations.’

Can people with ideomotor apraxia form intentions?

\citep[p.~7]{mylopoulos:2016_intentions}: ‘Typically the result of lesion

to SMA or anterior corpus callosum, AHS is a condition in which patients

perform complex, goal-oriented movements with their cross-lesional limb

that they feel unable to directly inhibit or control. The limb is often

disproportionately reactive to environmental stimuli, carrying out

habitual behaviors that are inappropriate to the context, e.g., grabbing

food from a dinner companion’s plate (Della Sala 2005, 606). It is clear

from many of the behaviors observed in these cases that the anarchic limb

fails to hook up with the agent’s intentions.’

In the rest of this talk I’m not going to suggest a solution to the interface problem.

Instead, I want to mention some considerations

which may complicate attempts to solve it.

\section{Five Complications}

Any attempt to solve the interface problem must surmount at least five complications.

five complications

This isn’t a preliminary to my talk; although I will say something about how to solve

the interface problem right at the end, I’m mainly concerned to persuade you that

the interface problem is tricky to solve.

1

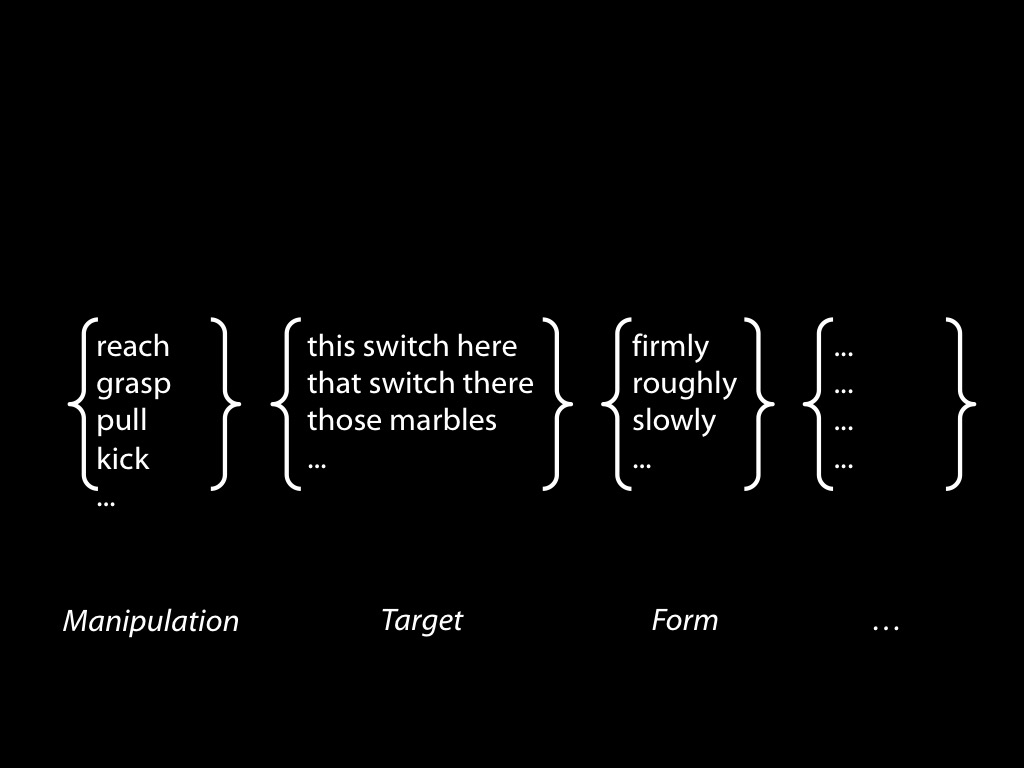

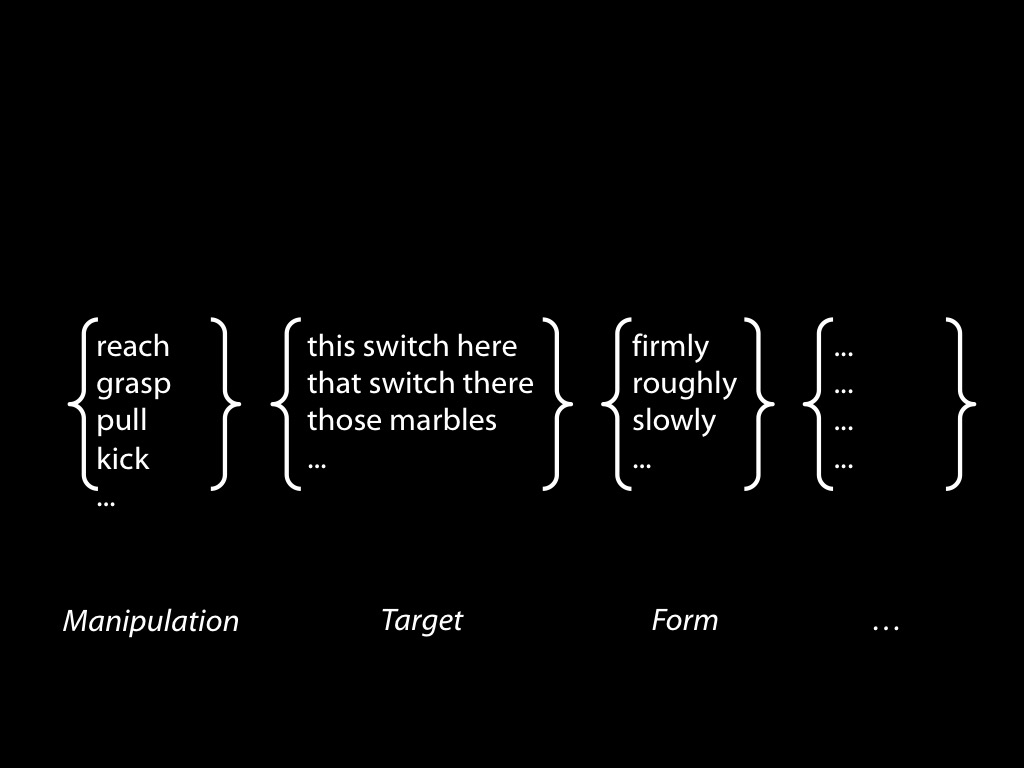

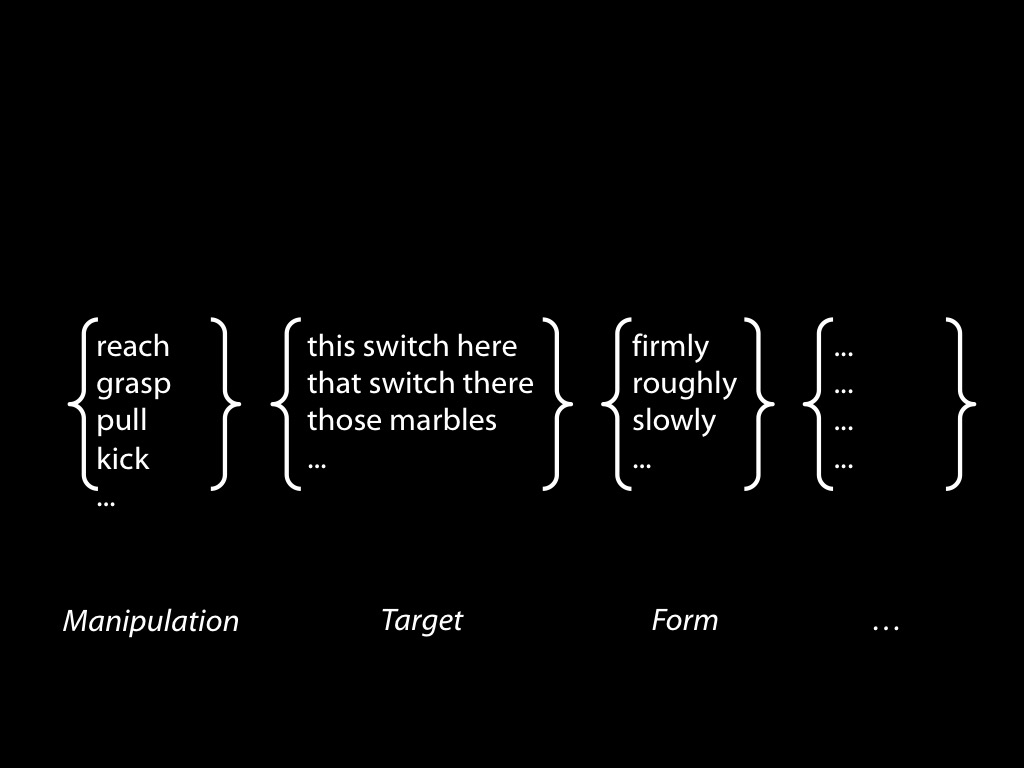

First consideration which complicates the interface problem: outcomes have a complex anatomy

comprising manipulation, target, form and more.

There is evidence that each of these can be represented motorically; and of course these

can all be specified by intentions too.

On the targets of actions, as Elisabeth has stressed,

motor representations represent not only ways of acting but also targets on which actions

might be performed and some of their features related to possible action outcomes involving

them (for a review see Gallese & Sinigaglia 2011; for discussion see Pacherie 2000, pp.

410-3). For example visually encountering a mug sometimes involves representing features

such as the orientation and shape of its handle in motor terms (Buccino et al. 2009;

Costantini et al. 2010; Cardellicchio et al. 2011; Tucker & Ellis 1998, 2001).

One possibility is that the Interface Problem breaks down into questions corresponding

to these three different components of outcomes. That is, an account of how the manipulations

specified by intentions and motor representations nonaccidentally match might end up being

quite different from an account of how the targets or forms match.

(I’m not saying this is right, just considering the possibility.)

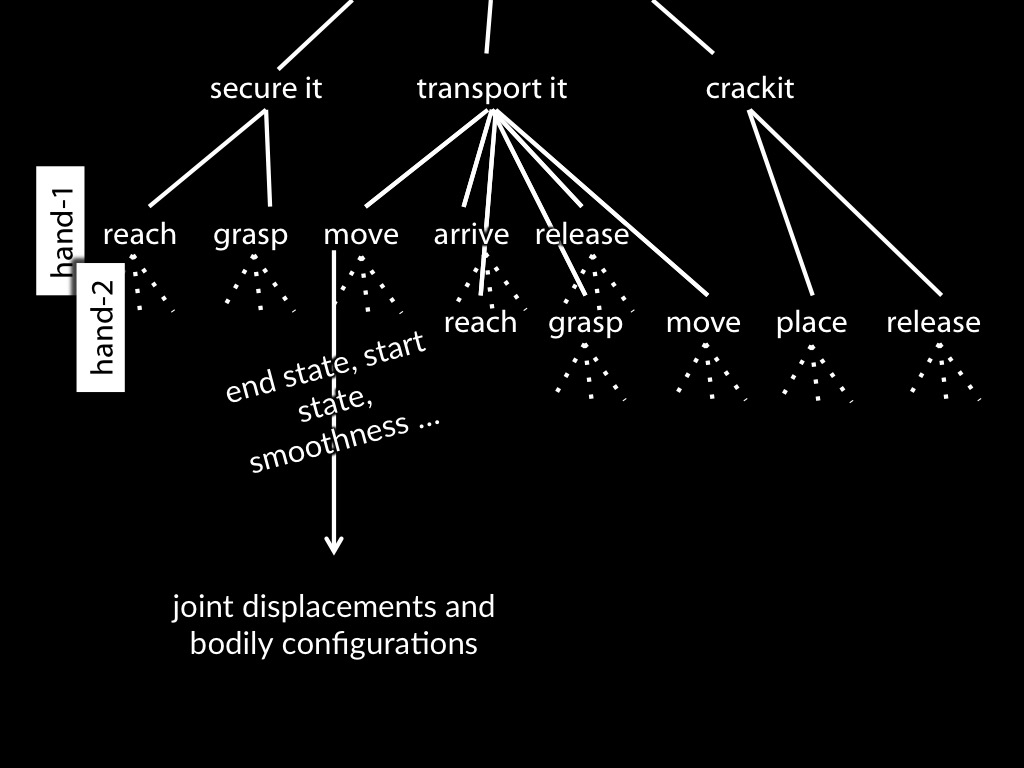

Second consideration which complicates the interface problem: scale.

This shows that we can’t think of the interface problem merely as a way of intentions

setting problems to be solved by motor representations: there may be multiple intentions

at different scales, and in some cases an intention may operate at a smaller scale than

a motor representation.

Suppose you have an intention to tap in time with a metronome.

Maintaining synchrony will involve two kinds of correction: phase and period shifts.

These appear to be made by mechanisms acting independently, so that correcting errors involves a distinctive pattern of overadjustment.

Adjustments involving phase shifts are largely automatic, adjustments involving changes in period are to some extent controlled.

How are period shifts controlled?

Importantly this is not currently known.

One possibility is that period adjustments can be made intentionally \citep[as][p.~2599 hint]{fairhurst:2013_being};

another is that there are a small number of ‘coordinative strategies’ \citep{repp:2008_sensorimotor} between which agents with sufficient skill can intentionally switch in something like the way in which they can intentionally switch from walking to running.

But either way, there can be two intentions: a larger-scale one to tap in time with a metronome

and a smaller-scale one to adjust the tapping which results in a period shift.

EP: Skilled piano playing means being able to have intentions with respect to larger units than a

novice could manage. But in playing a 3-voice fugue you may need to pay attention to a particular

finger in order to keep the voices separate. So you need to be able to attend to both ‘large chunks’

(e.g. chords) of action and ‘small chunks’ (e.g. keypresses) simultaneously.

BACKGROUND:

Because no one can perform two actions without introducing some tiny variation between them, entrainment of any kind depends on continuous monitoring and ongoing adjustments \citep[p.~976]{repp:2005_sensorimotor}.

% \textcite[p.~976]{repp:2005_sensorimotor}: ‘A fundamental point about SMS is that it cannot be sustained without error correction, even if tapping starts without any asynchrony and continues at exactly the right mean tempo. Without error correction, the variability inherent in any periodic motor activity would accumulate from tap to tap, and the probability of large asynchronies would increase steadily (Hary & Moore, 1987a; Voillaume, 1971; Vorberg & Wing, 1996). The inability of even musically trained participants to stay in phase with a virtual metronome (i.e., with silent beats extrapolated from a metronome) can be demonstrated easily in the synchronization–continuation paradigm by computing virtual asynchronies for the continuation taps. These asynchronies usually get quite large within a few taps, although occasionally, virtual synchrony may be maintained for a while by chance.’

% \citet[p.~407]{repp:2013_sensorimotor}: ‘Error correction is essential to SMS, even in tapping with an isochronous, unperturbed metronome.’

One kind of adjustment is a phase shift, which occurs when one action in a sequence is delayed or brought forwards in time.

Another kind of adjustment is a period shift; that is, an increase or reduction in the speed with which all future actions are performed, or in the delay between all future adjacent pairs of actions.

These two kinds of adjustment,

phase shifts and period shifts,

appear to be made by mechanisms acting independently, so that correcting errors involves a distinctive pattern of overadjustment.%

\footnote{%

See \citet[pp.~474–6]{schulze:2005_keeping}. \citet{keller:2014_rhythm} suggest, further, that the two kinds of adjustment involve different brain networks.

Note that this view is currently controversial: \citet{loehr:2011_temporal} could be interpreted as providing evidence for a different account of how entrainment is maintained.

}

\citet[p.~987]{repp:2005_sensorimotor} argues, further, that while adjustments involving phase shifts are largely automatic, adjustments involving changes in period are to some extent controlled.
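The difference between the two kinds of adjustment can be made concrete with a toy simulation. What follows is only a minimal sketch, assuming a simple linear error-correction model: the function name `simulate_tapping`, the gains `alpha` (phase correction) and `beta` (period correction), and all the numerical values are hypothetical choices made for illustration, not parameters taken from the studies cited. Noise is left out so that the effect of each correction is easy to see.

```python
def simulate_tapping(n_taps, metronome_period=500.0, initial_period=520.0,
                     initial_asynchrony=30.0, alpha=0.5, beta=0.1):
    """Return the asynchronies (tap time minus beat time, in ms).

    alpha and beta are hypothetical gains for illustration only:
    alpha scales the phase shift, beta scales the period shift.
    """
    period = initial_period      # the tapper's internal period
    tap = initial_asynchrony     # the first beat occurs at time 0
    asynchronies = []
    for k in range(n_taps):
        beat = k * metronome_period
        asynchrony = tap - beat
        asynchronies.append(asynchrony)
        # Phase shift: move the next tap only, by a fraction of the error.
        next_tap = tap + period - alpha * asynchrony
        # Period shift: change the interval used for all future taps.
        period = period - beta * asynchrony
        tap = next_tap
    return asynchronies


corrected = simulate_tapping(60)
uncorrected = simulate_tapping(60, alpha=0.0, beta=0.0)
print(round(corrected[-1], 6), uncorrected[-1])
```

In this sketch, with both gains set to zero the initial error and the period mismatch are never corrected, so the asynchrony grows with every tap. With both corrections switched on the asynchrony shrinks towards zero, but because both respond independently to the same error it passes through zero and briefly becomes negative before settling.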

% (‘two error correction processes, one being largely automatic and operating via phase resetting, and the other being mostly under cognitive control and, presumably, operating via a modulation of the period of an internal timekeeper’ \citep[p.~987]{repp:2005_sensorimotor})


2

So it is not that intentions are restricted to specifying outcomes which form the head of the means-end

hierarchy of outcomes represented motorically.

They can also influence aspects of outcomes at smaller scales.

Third consideration which complicates the interface problem: dynamics.

It’s ‘not just how motor representations are triggered by intentions, but how motor

representations’ sometimes nonaccidentally continue to match intentions as circumstances change in unforeseen ways ‘throughout

skill execution’

\citep[p.~19]{fridland:2016_skill}.

Fridland, 2016 p. 19

Here we need to distinguish different kinds of change.

Some changes can be flexibly accommodated motorically without any need for intention

to be involved, or even for the agents to be aware of the change. This includes perturbations

in the apparent direction of motion while drawing \citep{fourneret:1998_limited}.

But other changes may require a change in intention: circumstances may change in such a way

that you wish either to abandon the action altogether, or else switch target midway through.

\textbf{KEY}: in executing an intention you may learn something which causes you to change

your intention; for example, you may learn that the action is just too awkward, or that the

ball is out of reach. So motor processes can result in discoveries that nonaccidentally cause

changes in intention.

This also shows that we can’t think of the interface problem merely as a way of intentions

‘handing off’ to motor representations: in some cases, the matching of motor representations

and intentions will nonaccidentally persist.

unidirectional bidirectional

These reflections on dynamics (and on scale too[?]) suggest that the interface problem

is not a unidirectional but a bidirectional one. The agent who intends probably cannot be blind to

the ways in which motor representations structure her actions since the structure both provides

and limits opportunities for interventions.

4

The interface problem is the problem of explaining how there could be nonaccidental matches.

But there is a related developmental problem: What is the process by which humans acquire

abilities to ensure that their intentions and motor representations sometimes nonaccidentally

match?

A solution to the interface problem must provide a framework for answering the corresponding

question about development.

Imagination: intentions and motor representations can nonaccidentally match not only

when we are acting but also when we are merely imagining acting.

Mylopoulos and Pacherie’s Proposal

\section{Mylopoulos and Pacherie’s Proposal}

Mylopoulos and Pacherie propose that the Interface Problem can be solved

by appeal to executable action concepts.

This is perhaps also a promising idea for tackling the New Interface Problem.

‘As defined by Tutiya et al., an executable concept of a type of movement is a

representation, that could guide the formation of a volition, itself the proximal cause of

a corresponding movement. Possession of an executable concept of a type of movement thus

implies a capacity to form volitions that cause the production of movements that are

instances of that type.’

\citep[p.~7]{pacherie:2011_nonconceptual}

Mylopoulos and Pacherie:

‘an executable [action] concept of a type of movement is a representation, that could guide the formation of a volition, itself the proximal cause of a corresponding movement.

Possession of an executable concept of a type of movement thus implies a capacity to form volitions that cause the production of movements that are instances of that type.’

Their explanation: two different kinds of action concept, one purely

observational.

‘For instance, most of us have a concept of ‘tail wagging’ that we can deploy when we

judge, for instance, that Julius the dog is wagging his tail. Or if you are not

convinced that tail wagging constitutes purposive behavior, consider the action concept

‘tail swinging’, as in ‘cows constantly swing their tails to flick away flies’.’

Pacherie 2011, p. 8

Objections:

(i) It looks like the executable action concept builds in a solution to the interface problem

rather than solving it.

The executable action concept is a ready-made solution.

To answer this objection, we need an account of how concepts and motor representations

become bound together.

Simple theory of executable action concepts:

there are concept--motor schema associations.

REPLY: Simple theory of executable action concepts: they’re just associations between

concepts and motor schema (partially specified outcomes that are represented motorically).

[Objections (ctd):

(ii) Fridland: there is a dynamic component to the interface problem.

An answer to it must specify ‘not just how motor representations are triggered by

intentions, but how motor representations’ continue to match intentions as circumstances

change in unforeseen ways ‘throughout skill execution’ (Fridland, 2016 p. 19).]

(iii) We need to understand how there is matching not only concerning how you act

(push vs pull, say) but also on which object you act (this switch or that one, say).

On (iii), note that, as Elisabeth has stressed,

motor representations represent not only ways of acting but also objects on which actions

might be performed and some of their features related to possible action outcomes involving

them (for a review see Gallese & Sinigaglia 2011; for discussion see Pacherie 2000, pp.

410-3). For example visually encountering a mug sometimes involves representing features

such as the orientation and shape of its handle in motor terms (Buccino et al. 2009;

Costantini et al. 2010; Cardellicchio et al. 2011; Tucker & Ellis 1998, 2001).

Executable action concepts might work for manipulation and form, but are less likely to work

for targets since there are just so many of them.

On the other hand, we might think of the interface problem as having many aspects so that

we don't solve it all at once.

Further issues:

(iv) knowledge of action: shouldn’t the relation be such as to allow someone who uses the

concept to know which action she is thinking about?

(v)

So I think that while there’s much more to be done in thinking through Myrto and Elisabeth’s

proposal about executable action concepts, this is a step forwards but not necessarily a full

solution to the interface problem.

What other resources are available?

A Puzzle about Thought, Experience and the Motoric

\section{A Puzzle about Thought, Experience and the Motoric}

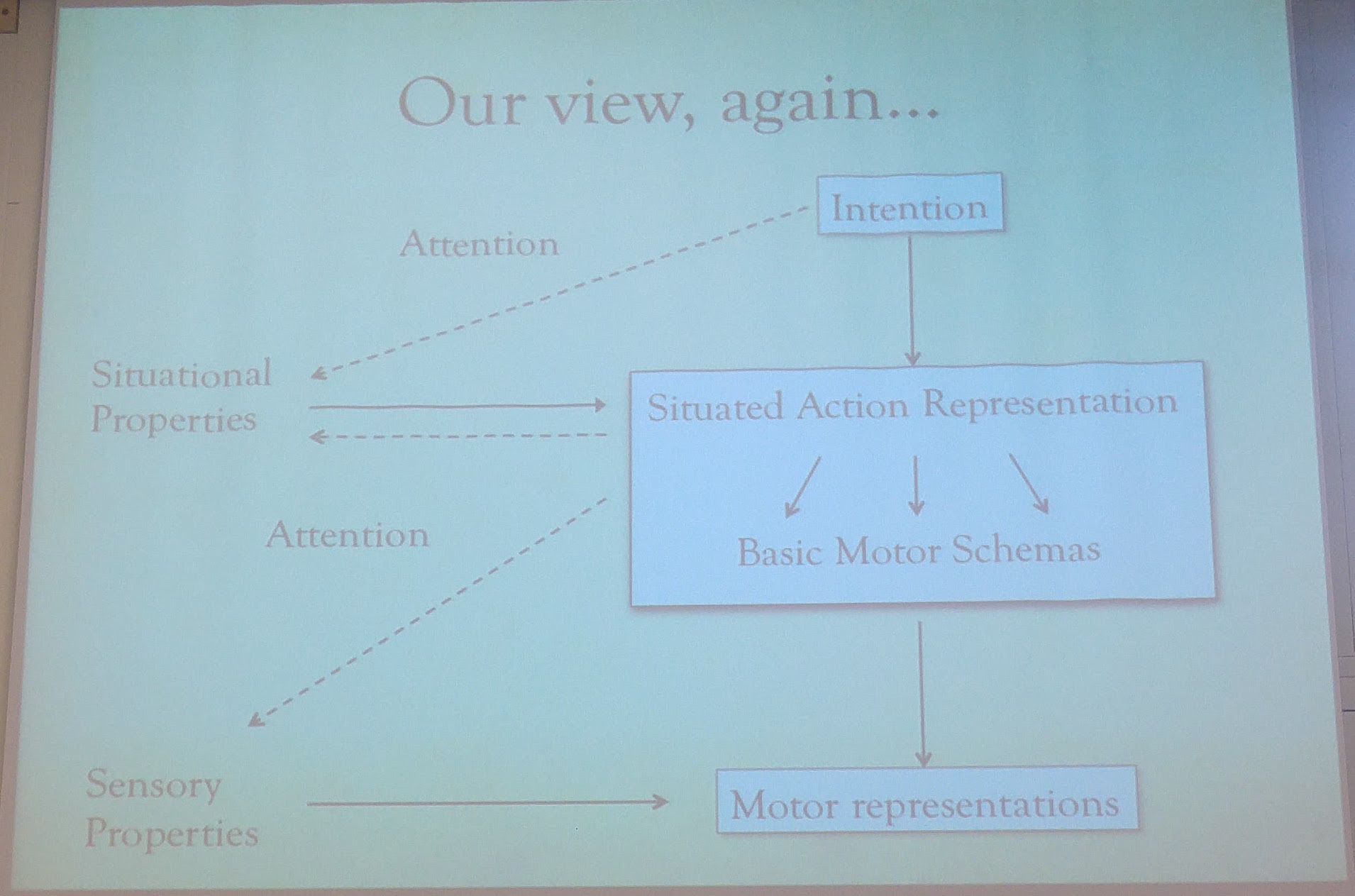

Motor representations occur when merely observing others act and sometimes influence thoughts about the

goals of observed actions. Further, these influences are content-respecting: what you think about an

action sometimes depends in part on how that action is represented motorically in you. The existence of

such content-respecting influences is puzzling. After all, motor representations do not feature

alongside beliefs or intentions in reasoning about action; indeed, thoughts are inferentially isolated

from motor representations. So how could motor representations have content-respecting influences on

thoughts?

In action observation, motor representations of outcomes ...

... underpin goal-tracking, and

sometimes facilitate the identification of goals in thought.

So

where motor representations influence a thought about an action being directed to a particular outcome, there is normally a motor representation of this outcome, or of a matching outcome.

This conclusion entails that motor representations have content-respecting

influences on thoughts. It is the fact that one outcome rather than another is represented

motorically which explains, at least in part, why the observer takes this outcome (or a matching

one) to be an outcome to which the observed action is directed.

But

how could motor representations have content-respecting influences on thoughts given their inferential isolation?

But how could motor representations

have content-respecting influences on thoughts? One familiar way to explain content-respecting

influences is to appeal to inferential relations. To illustrate, it is no mystery that your beliefs

have content-respecting influences on your intentions, for the two are connected by processes of

practical reasoning. But motor representation, unlike belief and intention, does not feature in

practical reasoning. Indeed, thought is inferentially isolated from it. How then could any motor

representations have content-respecting influences on thoughts?

motor representation -> experience of action -> thought

In something like the way experience may tie thoughts about seen objects to the representations

involved in visual processes, so also it is experience that connects what is represented

motorically to the objects of thought.

[significance]

This may matter for understanding thought about action. On the face of it, the inferential

isolation of thought from motor representation makes it reasonable to assume that an account of

how humans think about actions would not depend on facts about motor representation at all. But

the discovery that motor representations sometimes shape experiences revelatory of action

justifies reconsidering this assumption. It is plausible that people sometimes have reasons for

thoughts about actions, their own or others', that they would not have if it were not for their

abilities to represent these actions motorically. To go beyond what we have considered here, it

may even turn out that an ability to think about certain types of actions depends on an ability

to represent them motorically.

[consequence]

One consequence of our proposal concerns how experiences of one's own actions relate to

experiences of others' actions. For almost any action, performing it would typically involve

receiving perceptual information quite different to that involved in observing it. This may

suggest that experiences involved in performing a particular action need have nothing in common

with experiences involved in observing that action. However, two facts about motor

representation, its double life and the way it shapes experience, jointly indicate otherwise. For

actions directed to those goals that can be revealed by experiences shaped by motor

representations, there are plausibly aspects of phenomenal character common to experiences

revelatory of one's own and of others' actions. In some respects, what you experience when others

act is what you experience when you yourself act.

The claim that there is experience of action is based on an earlier argument.

I now want to review and then object to that argument.

(The conclusion may be correct, but the argument does not establish it.)

Puzzle

How could motor representations have content-respecting influences on thoughts given their inferential isolation?

The Twin Interface Problems

\section{The Twin Interface Problems}

How could intentions have content-respecting influences on motor representations given their inferential isolation?

And how could motor representations have content-respecting influences on thoughts given their inferential isolation?

So here are my two puzzles ...

The first one comes straight from Lecture 01; the second is new, but based on

ideas discussed in Lecture 02.

Interface Problem

intention -> motor representation

How could intentions have content-respecting influences on motor representations given their inferential isolation?

New Interface Problem

motor representation -> judgement

How could motor representations have content-respecting influences on thoughts given their inferential isolation?

\section{Appendix: Representational Format}

As background we first need a generic distinction between content and format.

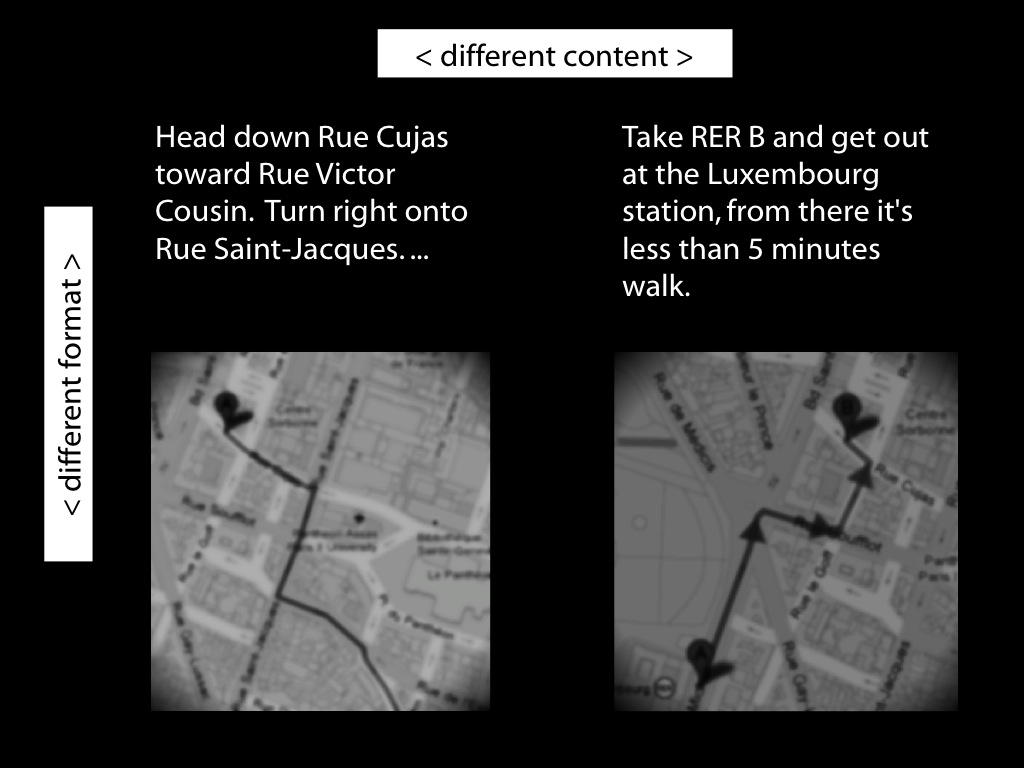

Imagine you are in an unfamiliar city and are trying to get to the central station.

A stranger offers you two routes. Each route could be represented by a distinct line

on a paper map. The difference between the two lines is a difference in content.

Each of the routes could alternatively have been represented by a distinct series

of instructions written on the same piece of paper; these cartographic and

propositional representations differ in format. The format of a representation

constrains its possible contents. For example, a representation with a cartographic

format cannot represent what is represented by sentences such as `There could not be a

mountain whose summit is inaccessible.'\footnote{ Note that the distinction between

content and format is orthogonal to issues about representational medium. The maps in

our illustration may be paper maps or electronic maps, and the instructions may be spoken,

signed or written. This difference is one of medium.} The distinction between content and

format is necessary because, as our illustration shows, each can be varied independently

of the other.

Format matters because only where two representations have the same format can they be straightforwardly inferentially integrated.

To illustrate, let’s stay with representations of routes.

Suppose you are given some verbal instructions describing a route. You are then shown a representation of a route on a map and asked whether this is the same route that was verbally described. You are not allowed to find out by following the routes or by imagining following them.

Special cases aside, answering the question will involve a process of translation because two distinct representational formats are involved, propositional and cartographic. It is not enough that you could follow either representation of the route. You will also need to be able to translate from at least one representational format into at least one other format.
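The role of translation in the route example can be sketched in code. This is only an illustration under stated assumptions: verbal instructions are modelled as a list of (direction, number of blocks) pairs, a route on a map as a list of grid points, and the names `STEPS`, `translate_instructions` and `same_route` are hypothetical. The point it illustrates is that the two representations cannot be compared directly; one has first to be translated into the format of the other.

```python
# Unit grid steps for each compass word used in the instructions
# (a hypothetical encoding chosen for this illustration).
STEPS = {"north": (0, 1), "south": (0, -1), "east": (1, 0), "west": (-1, 0)}


def translate_instructions(start, instructions):
    """Translate a propositional-style route into a cartographic-style one.

    instructions is a list of (direction, blocks) pairs; the result is the
    sequence of grid points visited, beginning with the start point.
    """
    x, y = start
    points = [(x, y)]
    for direction, blocks in instructions:
        dx, dy = STEPS[direction]
        for _ in range(blocks):
            x, y = x + dx, y + dy
            points.append((x, y))
    return points


def same_route(start, instructions, map_route):
    """Compare the two routes only after translating into a common format."""
    return translate_instructions(start, instructions) == list(map_route)


verbal = [("north", 2), ("east", 1)]
drawn = [(0, 0), (0, 1), (0, 2), (1, 2)]
other = [(0, 0), (1, 0), (1, 1), (1, 2)]
print(same_route((0, 0), verbal, drawn), same_route((0, 0), verbal, other))
```

Without `translate_instructions` there is nothing to compare: a list of direction words and a list of grid points are not the same kind of thing, even when they specify the same route.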

Think of predictive coding.

Think of CTM.

Think of any account of the mind you like.

I bet that the only way that non-accidental matches can occur

on the account is through a process of inference.

Differences in representational format

block inferential integration

(without translation),

and so create interface problems.

\citet[p.~2]{jackendoff:1996_architecture} proposes

‘a system of interface modules. An interface module communicates between two

levels of encoding, say L1 and L2, by carrying a partial translation of information

in L1 form into information in L2 form.’

Translation might work in some cases

(maybe between phonology and syntax,

or between spatial and linguistic representation?).

But it does not appear to work for motivational states,

and I guess not for executive (Bach: effective) states either.

The mind is made up of lots of different, loosely

connected systems that work largely independently of each other. To a certain extent it’s fine for

them to go their own way; and of course since they all get the same inputs (what with being parts of

a single subject), there are limits on how separate the ways they go can be. Still, it’s often good

for them to be aligned, at least eventually.

But how are they ever nonaccidentally brought into alignment?

One function of experience

is to solve these problems.

Experience is what enables there to be nonaccidental eventual alignment of largely independent

cognitive systems. This is what experience is for.

Can we think along these lines in the case of action?

This was clear enough in Dickinson’s case.

But how could it work in the case of intention vs motor representation?

Two complications make it appear difficult ...